An Introduction

Cameron was originally conceived as a demonstration project for the launch of the Intel Edison micro-controller at the 2014 Intel Developer Forum. I was selected from a group of Intel employees to build something “cool” with Edison. The first version of Cameron, pictured above, used 106 discrete LEDs for his shell and 4 hobby servos to move his eyes independently. Each eye featured a webcam. Cameron would look around, use OpenCV to pick out a large area of color, and then display that color on his shell.

Concepts Demonstrated

Servos

Arduino I/O

Processing, Python, C/C++

Computer Vision

Intel Edison

Adafruit NeoPixel

Discrete LEDs

Early Work

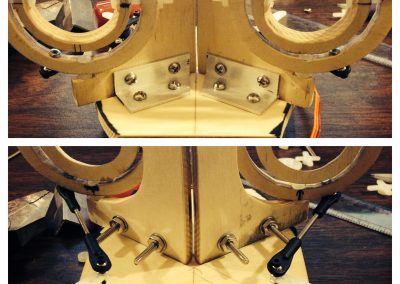

Cameron started out as a hand-drawn sketch in my sketchbook. His head was fashioned from a block of floral foam. The eye gimbals were hand-cut from thin plywood and connected with vinyl screws and springs. The body was crafted from a salad bowl and some bits of snake-print cloth. It was a fun build. See the images to the right.

What’s the Goal?

Although this was a whimsical robot, it met the objective of demoing the Intel Edison, was one of the first projects built with Edison, and was mentioned in Engadget (shown in the gallery above). I also got a chance to deepen my understanding and appreciation of robotics, computer vision, micro-controllers, and what it might take to build a product. I recently completed a rebuild of Cameron, replacing the discrete LEDs with NeoPixels driven by a FadeCandy controller and swapping the Intel Edison for a Raspberry Pi 3B. I also updated the program so that the cameras track faces independently, and revised the algorithm that selects the color displayed on Cameron’s body.

Let me know if you have any questions. mwalker@icecodeatl.com

Servos

The servos used in Cameron are low-cost hobby servos. One of the challenges with this type of servo is that it provides no position feedback to the controller: the controller can tell a servo to go to a certain position, but it never actually knows where the servo is. Because the eyes, in follow mode, try to center a face in the camera’s view, it’s important to keep track of each servo’s position. There are a number of ways to approximate feedback, but its absence can make certain designs challenging. In this project, I simply center the eyes on each start and keep track of their positions in software.
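A minimal Python sketch of the “center on start, then track in software” approach is shown below. It is an illustration, not Cameron’s actual code: `TrackedServo`, `nudge_toward`, the 0 to 180 degree range, and the proportional gain are all hypothetical, and the `write_angle` callback stands in for whatever really drives the servo. The software position is simply the last commanded angle, so it is only as accurate as the assumption that the servo actually reached that angle.

```python
from typing import Callable, Optional


class TrackedServo:
    """Hobby servo whose position is tracked in software (no hardware feedback).

    Hypothetical helper: `write_angle` is an assumed callback that sends a
    commanded angle to the real servo driver.
    """

    def __init__(self, write_angle: Callable[[float], None],
                 min_angle: float = 0.0, max_angle: float = 180.0) -> None:
        self._write = write_angle
        self.min_angle = min_angle
        self.max_angle = max_angle
        # Position is unknown until the servo has been centered once.
        self.angle: Optional[float] = None

    def center(self) -> float:
        """Drive to the midpoint at startup so the software position is known."""
        return self.move_to((self.min_angle + self.max_angle) / 2)

    def move_to(self, angle: float) -> float:
        # Clamp to the mechanical range, command the servo, and remember the
        # commanded angle as the best available estimate of its position.
        angle = max(self.min_angle, min(self.max_angle, angle))
        self._write(angle)
        self.angle = angle
        return angle

    def nudge_toward(self, offset_px: float, frame_width_px: int,
                     gain_deg: float = 10.0) -> float:
        """Proportional step that reduces a face's horizontal offset from center."""
        if self.angle is None:
            raise RuntimeError("call center() first; position is unknown")
        # Normalize the pixel offset to roughly -1..1 (half the frame width).
        error = offset_px / (frame_width_px / 2)
        return self.move_to(self.angle + gain_deg * error)
```

For example, with `commands = []` and `pan = TrackedServo(commands.append)`, `pan.center()` commands 90 degrees, and `pan.nudge_toward(160, 640)` (a face 160 px right of center in a 640 px frame) then commands 95 degrees. Whether a positive step turns the eye left or right depends on how the servo is mounted, so the sign of the gain may need flipping.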

Arduino I/O

The Intel Edison uses Arduino I/O (with a few exceptions) to communicate with the outside world (i.e., to control servos and read sensors). At the time of this design, many if not most controllers were driven by discrete I/O rather than I2C, so connecting all of the LED drivers and 4 servos was a challenge. I was able to work around this limitation creatively.

Processing, Python, C/C++

Much of the code for Cameron was written in Processing, using the Edison’s Arduino mode. This made it a bit easier to control the servos, but the computer vision portion had to be written in C/C++. Until all of the code converged in Python on the Raspberry Pi, the eyes looked around randomly at intervals set in the code.

Computer Vision

Initially, computer vision was used to determine a predominant color in images from the webcam. In a later revision, Cameron was able to track a person’s face with the servos and determine the color of that person’s shirt. Cameron would then display that color on its body.
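The write-up doesn’t describe the color-selection algorithm itself, so the Python sketch below swaps in one simple, common technique: quantize each pixel into coarse RGB bins, find the most populated bin, and return the average color of the pixels in that bin. `dominant_color` and `bins_per_channel` are hypothetical names, and the function assumes the pixels (for example, a shirt region below a detected face) have already been extracted as `(r, g, b)` tuples with values from 0 to 255.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple

RGB = Tuple[int, int, int]


def dominant_color(pixels: Iterable[RGB], bins_per_channel: int = 8) -> RGB:
    """Return the average color of the most populated coarse RGB bin.

    Hypothetical helper illustrating histogram-style color selection.
    """
    step = 256 // bins_per_channel
    counts: Counter = Counter()
    sums: Dict[Tuple[int, int, int], List[int]] = {}
    for r, g, b in pixels:
        # Pixels with similar colors fall into the same coarse bin.
        key = (r // step, g // step, b // step)
        counts[key] += 1
        s = sums.setdefault(key, [0, 0, 0])
        s[0] += r
        s[1] += g
        s[2] += b
    if not counts:
        raise ValueError("no pixels supplied")
    # Ties go to the bin encountered first.
    key, n = counts.most_common(1)[0]
    s = sums[key]
    # Integer average of the pixels in the winning bin.
    return (s[0] // n, s[1] // n, s[2] // n)
```

OpenCV stores frames in BGR order, so a frame would need converting first, for example with `cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)`. Coarser bins make the result less sensitive to lighting noise, at the cost of merging similar shades.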

Intel Edison

Originally, Cameron was powered by a single Intel Edison.

Adafruit NeoPixel

This was a huge improvement over the discrete LED design! In general, NeoPixels were much more flexible and easier to program. They were first used in the Intel Edison version and later carried over to the Raspberry Pi-based version.
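FadeCandy is normally driven through its `fcserver` daemon using the Open Pixel Control (OPC) protocol, where each message is a 1-byte channel, a 1-byte command (0 means “set pixel colors”), a 2-byte big-endian payload length, and then one RGB triple per pixel. The Python sketch below builds such a message and sends a solid color. It assumes `fcserver` is running locally on its default port 7890 and that all 64 NeoPixels are on channel 0; the function names are hypothetical, not taken from Cameron’s code.

```python
import socket
import struct
from typing import Sequence, Tuple

RGB = Tuple[int, int, int]


def opc_set_pixels(pixels: Sequence[RGB], channel: int = 0) -> bytes:
    """Build an Open Pixel Control 'set pixel colors' (command 0) message."""
    data = bytearray()
    for r, g, b in pixels:
        # bytes() raises ValueError if any component is outside 0..255.
        data += bytes((r, g, b))
    # Header: channel, command, payload length as a big-endian 16-bit integer.
    header = struct.pack(">BBH", channel, 0, len(data))
    return header + bytes(data)


def fill(color: RGB, count: int = 64,
         host: str = "127.0.0.1", port: int = 7890) -> None:
    """Send one solid color to `count` pixels via an assumed local fcserver."""
    with socket.create_connection((host, port), timeout=2.0) as sock:
        sock.sendall(opc_set_pixels([color] * count))
```

For a 64-pixel strip, each message is 4 header bytes plus 192 color bytes, and calling `fill((255, 0, 0))` would set the whole body to red. Sending the entire frame on every update keeps the client stateless, which fits a loop that recomputes the display color from each camera frame.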

Discrete LEDs

This was the most time-consuming portion of the design. At the time, using over 100 discrete LEDs was the easiest way to give Cameron’s body a multi-color display that was cheap and shaped the way I wanted. It took over 24 hours to wire-wrap all 4 pins of each LED and connect each one to the correct node. By comparison, the NeoPixel design uses 3 wires to control 64 LEDs.